Tech Setup for the Reckoner East Asia Tour

Posted Jun 19, 2024

Tags: music, gear, production

Between May 25th and June 7th, I played 7 Reckoner shows across 4 cities on a tour through mainland China, Hong Kong and Thailand. All but one of the trips between cities were by air: six flights in total, including transfers. This is a quick post on the gear I carried with me, my setup for those performances, and the optimizations I had to make for travel.

The central impetus for this trip was attending the International Conference on Live Coding (ICLC) in Shanghai, where I was scheduled to perform a concert piece. Making music on my computer was thus a crucial aspect of how I planned on performing.

I would have loved to carry my guitar, but it made little sense to carry anything besides my computer given how many flights I had to get on. Still, I decided to bring my Lyra-8 noise synth along. Despite the hassle, I think it made the shows very enjoyable.

A lot of this post is about open source tools and extensions that I duct-taped together to suit my needs. I wanted to keep the setup simple enough that I could reason about it in unfamiliar or chaotic environments, and to reduce the number of points of failure. I also tried to make the workflow as deterministic as possible to minimize the time required at soundcheck. This allowed me to test and arrange things on headphones long before it was time to go to the venue.

TidalCycles and SuperCollider

TidalCycles (or Tidal for short) has been at the core of my live act for the better part of the last five years. It is a patterning engine that works in conjunction with SuperCollider, an algorithmic sound engine, to enable live coding music. I use this system, along with its varied synthesis and sampling capabilities, to play Reckoner songs live. Most of this code is documented/archived here.

My performance at ICLC, titled “Really Useful Engines”, was centered around train motifs. For this, I built up a sample collection of subway announcements from New York City (something that I’d already recorded years ago for my track "An Evening Ritual"), as well as the a cappella sample and announcement recordings from the French SNCF system (partly recorded during my visit to France last year, with some samples pulled from YouTube for higher quality).

For other tracks, I relied extensively on a collection of glitch and drum samples I’ve been using for nearly a decade in Ableton. I no longer remember where I got them from, but they continue to serve me well. SuperDirt (a SuperCollider module) also ships with a great collection of 808 samples that I reach for regularly. Keeping drum samples consistent through varying arrangements in the set has served me well in establishing coherence and a kind of sonic signature.

For custom sample organization, I abandoned the idea of adding them to the default dirt-samples directory long ago, and have since been loading custom samples from my /samples directory into SuperCollider. I don't use a startup script, since my setup in SC tends to vary in little ways for each performance.

~dirt.loadSoundFiles("/Users/sumanth/Music/samples/*");

During my past performances in 2022 and 2023, I noticed SuperCollider quickly running out of audio headroom as I crammed more and more layers into my music. I tried getting Geikha’s soundgoodizer port for SuperCollider to work, but had no luck. SuperDirtMixer led to a similar dead end, possibly due to incompatibility with my ARM chip. So I now use a dedicated audio interface instead of the standard headphone out. This simply offers a more powerful amplifier to work with. I set all my Tidal orbit levels pretty low, and turn the gain up on the interface.

Text Management

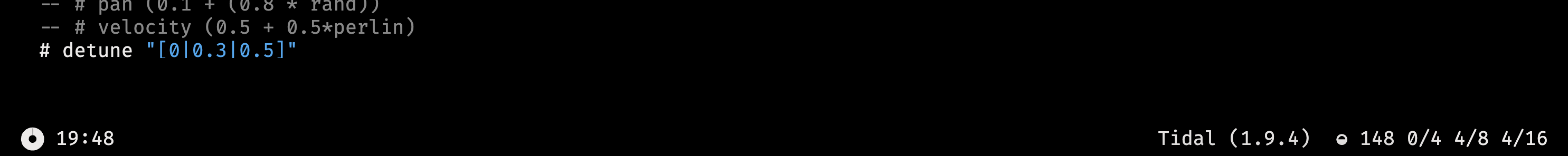

Atom was my editor of choice for live coding, but since its sunset in late 2022, I have slowly shifted to Text Management. Currently this is a Tidal-only text editor with great utilities for live performances. I swear by the cycle logs on the bottom right, as well as the Tidal mini-notation highlights while executing a pattern. The editor also comes with a little timer that’s useful for improvisational live sets with a hard time limit (as is often the case with algoraves).

Text Management allows for adding several custom Haskell files into the boot loader, which has resulted in better organization of my Tidal utilities. This also keeps me from messing with my default BootTidal.hs file, ensuring things don’t break unexpectedly. Text Management is currently out as a beta release, and is under active development!

Audio Interface and Microphone Setup

The microphone setup was straightforward – an SM58 going into my Focusrite 2i4 interface. I did notice occasional memory exceptions on SuperCollider when attempting to start it up with the interface set as the default device. My cope for this has been to bump the memory allocation high enough to make the errors stop.

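// Server options only take effect the next time the server boots.
Server.default.options.memSize = 65536; // real-time memory in kilobytes (default is 8192)
Server.default.options.outDevice_("Scarlett 2i4 USB");
Server.default.options.inDevice_("Scarlett 2i4 USB");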

To be able to sing over live coded instrumentals, I set up a bypass orbit that's mixed slightly louder than everything else. SuperDirt (the audio engine behind Tidal) already offers the \in synth for live audio input. As a redundancy, I wrote a similar synthdef called \input to achieve the same thing. I personally use the latter more often, since it allows me to debug and set volumes on the fly if things don’t sound right.

Having the vocal track set up as a synthdef also offers the advantage of running it through all the effects that Tidal offers. I use this quite often to filter my vocals and apply a certain amount of reverb and delay depending on the venue and arrangement. In the future, I want to experiment with building a programmable vocoder on top of this.
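As an illustration of what a live-input synthdef for SuperDirt can look like, here is a minimal sketch. It is not the actual \input definition from my set, which isn't reproduced in this post. It assumes SuperDirt is already running as ~dirt and that the microphone is on the first hardware input. The micGain argument is a hypothetical extra control, and would need a matching parameter defined on the Tidal side before it could be patterned.

```supercollider
(
// Sketch only: a stand-in for a live-input synth, not the original \input.
// Assumes SuperDirt is running as ~dirt and the mic is on hardware input 0.
SynthDef(\input, { |out, sustain = 1, pan = 0, micGain = 1|
    // Short fades at both ends avoid clicks when an event starts and stops.
    var env = EnvGen.ar(
        Env.linen(0.01, (sustain - 0.02).max(0), 0.01),
        doneAction: 2 // free the synth once the envelope has finished
    );
    // micGain is a hypothetical trim control for on-the-fly level fixes.
    var sound = SoundIn.ar(0) * micGain;
    // DirtPan spreads the mono signal over the orbit's channels and applies env.
    OffsetOut.ar(out, DirtPan.ar(sound, ~dirt.numChannels, pan, env));
}).add;
)
```

Because the synth writes to the orbit's bus through DirtPan like any other SuperDirt synth, the orbit's effects are applied to the voice in the same way as to samples.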

TidalLooper

TidalLooper is a module in SuperCollider that allows real-time recording of audio input, and presents it as a sample to TidalCycles. It offers 8 buffers (0-7) that you can write into and then use for playback.

Once available in a buffer, the vocal samples can be played back in pretty much the same way as any sample on Tidal. I use TidalLooper for breakbeat-style chopping and resampling in the outro of a WIP track called “Afraid To Try”, and build an elaborate staccato choir-of-one in “Rare Cares”. There were a few quantization quirks that needed to be accounted for, as well as the need to clear all buffers between consecutive songs with fresh looping/sampling. This required writing a custom recorder utility.

Having ready access to vocals makes it easy for me to lean into my proclivities for songwriting even in the context of computer music. Over the course of the tour, this increasingly became the centerpiece of my act and a big feature of what I do differently with live coded music.

Mutable Instruments

Recently, I discovered Volker Böhm's port of many Mutable Instruments synths into SuperCollider UGens, along with Tidal and SuperDirt definitions to access them. This helped me get past my ongoing gripe about the lack of good sound design tools in Tidal. The UGens match the original synths so closely that the hardware manuals work as great guides!

For the tour, I replaced my usage of the default Tidal reverb functions entirely with the MI verb effect, which offers a richer sound with more configurable parameters.

I’ve also come to rely heavily on the clouds delay unit, which offers both simple and granular delays. Adding this to vocals was a no-brainer, but the effect has also come in handy when attempting to build long sustains and pads (something that Tidal has always been uncooperative with). Using clouds in combination with supersaw was a key ingredient in breathing new life into “Monsters Under Your Bed”.

I’m still exploring braids and plaits. Both are fascinating synths with seemingly endless configurations that can be parameterized and patterned over time. Currently, I find myself reaching for the more abrasive and resonant tones, since they work really well for the tracks I’m working on. The former is also an excellent bass synth when tuned correctly and used in conjunction with mu (a low-frequency distortion effect). I want to spend more time studying them in the coming months.

Lyra-8

I carried my Lyra for just one track, really: “An Evening Ritual”. In the later shows, I began using it for gestural and improvisational bits in “Monsters...” as well as a new track called “[async]”.

There’s not a whole lot to explain in terms of its usage, given its simultaneous transparency and unpredictability. It introduced substantial variation in the music each night, creating a delightful balance between the composed/encoded and improvised parts of my set. Additionally, Lyra’s distortion circuit has a limiting functionality built-in, which is great when you want to get it to scream in self-oscillation without threatening the sound system.

I carried the Lyra in my backpack along with my laptop, which made security checks at the airport a little cumbersome but gave me peace of mind while flying. It helps that my synth is bright orange, and has the word “synthesizer” printed on it, making me look less suspicious when I pull out an obscure electronic device at the airport.

(Security check at JFK)

Problems

Given the variability in living arrangements, transport and venue facilities over the course of the tour, I relied extensively on checklists and modular packing. This helped with quick setup and teardown at venues, and achieving reasonable certainty that I had everything I needed for each show. Still, I did run into a moment of panic when I found myself locked out of my checked luggage containing everything.

During soundcheck, the only unrelenting challenge was balancing mic input levels, TidalLooper gain and feedback from the monitors. This involved a lot of trial and error with varying degrees of success at each venue. Often, I had a hand on the knob throughout the set as I stayed vigilant about feedback.

There were also occasional problems with getting SuperCollider to detect my audio interface without plunging into memory exceptions. I suspect it has to do with one of the USB-C ports on my laptop.

I also carried a Zoom H4n for recording all my sets, which turned out to be a futile effort given the device’s lack of gain staging. As a result, most of my recordings clip and are pretty much unusable. I did manage to record some of my sets using SuperCollider’s built-in record function, which captured all sounds made by my computer (thus not including sounds from the Lyra).
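For reference, the built-in recorder can be driven from sclang with a couple of lines. This is a minimal sketch that assumes the server s is already booted. The format settings are illustrative choices, not necessarily what I used on tour.

```supercollider
// Optional: choose the file format before starting (illustrative values).
s.recHeaderFormat = "wav";
s.recSampleFormat = "int24";

// Start recording the server's main outputs.
// With no path given, the file is written to Platform.recordingsDir.
s.record;

// ... play the set ...

// Stop recording and finalize the file.
s.stopRecording;
```

Since this only captures what the server itself outputs, anything plugged straight into the venue's mixer is left out of the file.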

(Before my set at Unformat Studios, Bangkok)

Overall, I think the processes and toolchain served me fairly well for this tour. I would hold on to this setup moving forward, given how tightly it is coupled with the tracks I’m currently working on. If I had to do this again, I would probably make the following changes:

- Carry a MIDI-to-CV/Gate converter that allows me to tether the Lyra to the computer

- Consider adding some kind of dynamic range compression to the final output as a means of taming sounds

- Set up some basic OSC functions in Tidal that allow for sending data to a visual artist over the network

- Get a more configurable recorder, and set aside time for gain staging it at each venue

- Find ways to reduce my desktop footprint - maybe get a laptop riser

- Sew some slots and patches into my bag to organize everything better, specifically optimized for easy packing/unpacking at airport security

- Figure out why Mutable Instruments Ripples won’t load, and fix that

- Carry a portable LED light that illuminates my very-not-white face during performances
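One possible starting point for the dynamic range item above is a peak limiter placed at the very end of the server's node tree. This is a sketch under assumptions rather than something tested on this setup: it assumes a stereo mix on the first two hardware outputs, the name \outputLimiter and the ceiling value are placeholders, and a limiter is a simpler stand-in for a full compressor.

```supercollider
(
// Sketch only: hypothetical name and settings, assuming a stereo mix on outputs 0 and 1.
~limiter = SynthDef(\outputLimiter, { |bus = 0, ceiling = 0.9|
    // Read whatever has been written to the output bus so far.
    var mix = In.ar(bus, 2);
    // Limiter is a look-ahead peak limiter, so it adds a short delay to the signal.
    ReplaceOut.ar(bus, Limiter.ar(mix, ceiling, 0.01));
}).play(RootNode(s), addAction: \addToTail); // run after everything else on the server
)
```

The synth has to sit after all other sound-producing nodes to catch the full mix, which is why it is added to the tail of the root node. It would need to be re-evaluated after stopping everything with Cmd-period, since that frees it along with the other synths.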
